Qiaolin Wang

qw2443@columbia.edu | Columbia EE | CV

I am Qiaolin Wang, a second-year Master’s student in Electrical Engineering at Columbia University, advised by Professor Nima Mesgarani.

I am passionate about building models that can Perceive, Reason, and Speak as naturally as humans do. My current research investigates the fundamental capabilities of Large Audio Language Models (LALMs), from their internal representations of syntax and context to their capacity for complex reasoning across modalities. In future work, I aim to build on this by pioneering Audio-Visual Understanding and unified models for Reasoning and Generation.

Before joining Columbia, I earned my B.Eng. in Computer Science from Wuhan University. I also worked as a Research Intern at Wiz.AI, where I developed a state-of-the-art (SOTA) Speech Emotion Recognition LLM.

News

| Date | Update |
|---|---|
| Nov 10, 2025 | 🏆 Our paper was awarded EMNLP SAC Highlight! |
| Nov 07, 2025 | 🎤 I will be presenting our work at the SANE 2025 workshop at Google NYC! |
| Sep 19, 2025 | 📄 SightSound-R1 is now available on arXiv! In this work, we propose a framework to transfer reasoning from VLMs to LALMs. |
| Aug 07, 2025 | 🎉 Thrilled that our paper was accepted for an oral presentation at EMNLP 2025! |

Selected Publications

- EMNLP

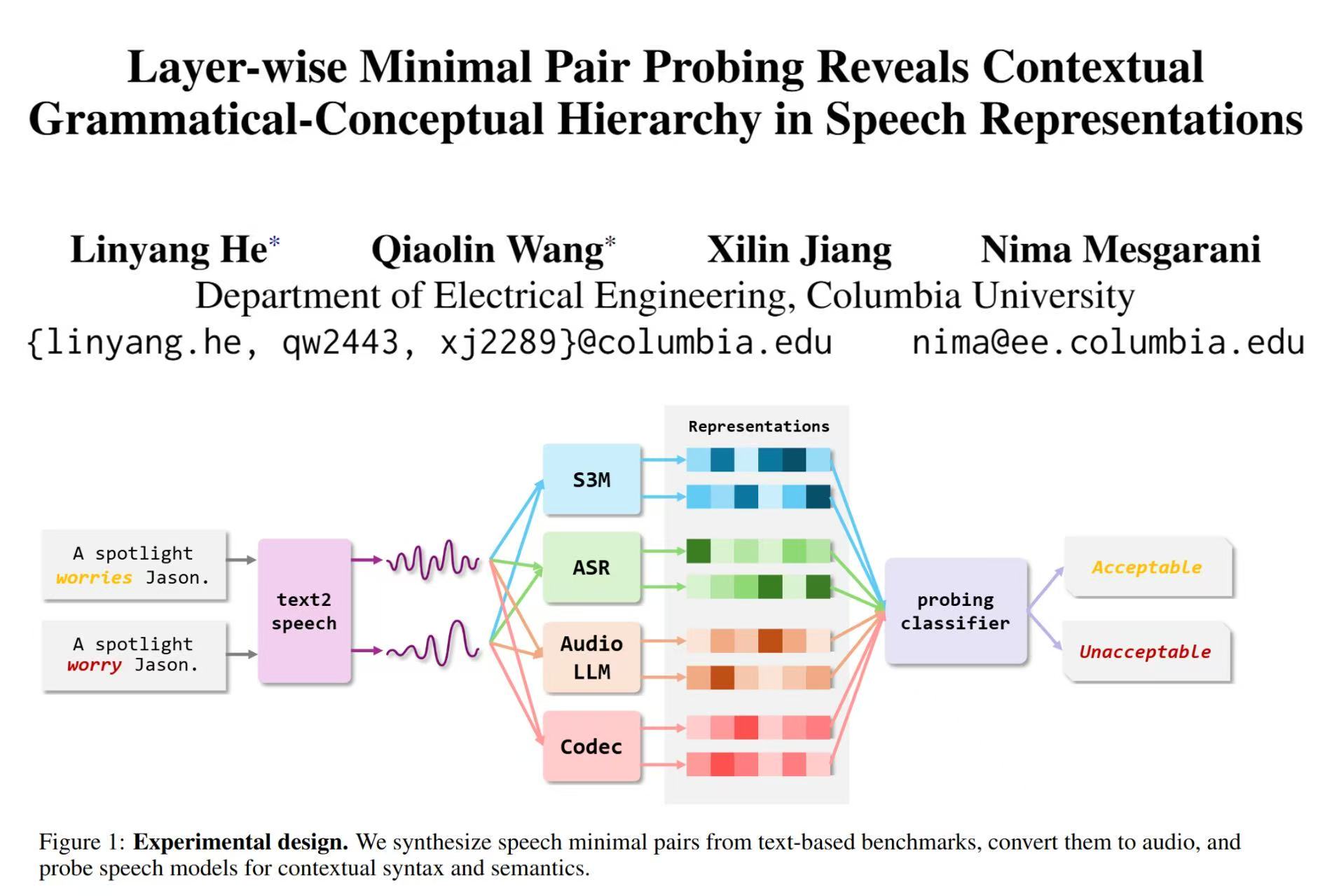

Layer-wise Minimal Pair Probing Reveals Contextual Grammatical-Conceptual Hierarchy in Speech Representations. In Proceedings of the 2025 Conference on Empirical Methods in Natural Language Processing, 2025. Oral presentation.


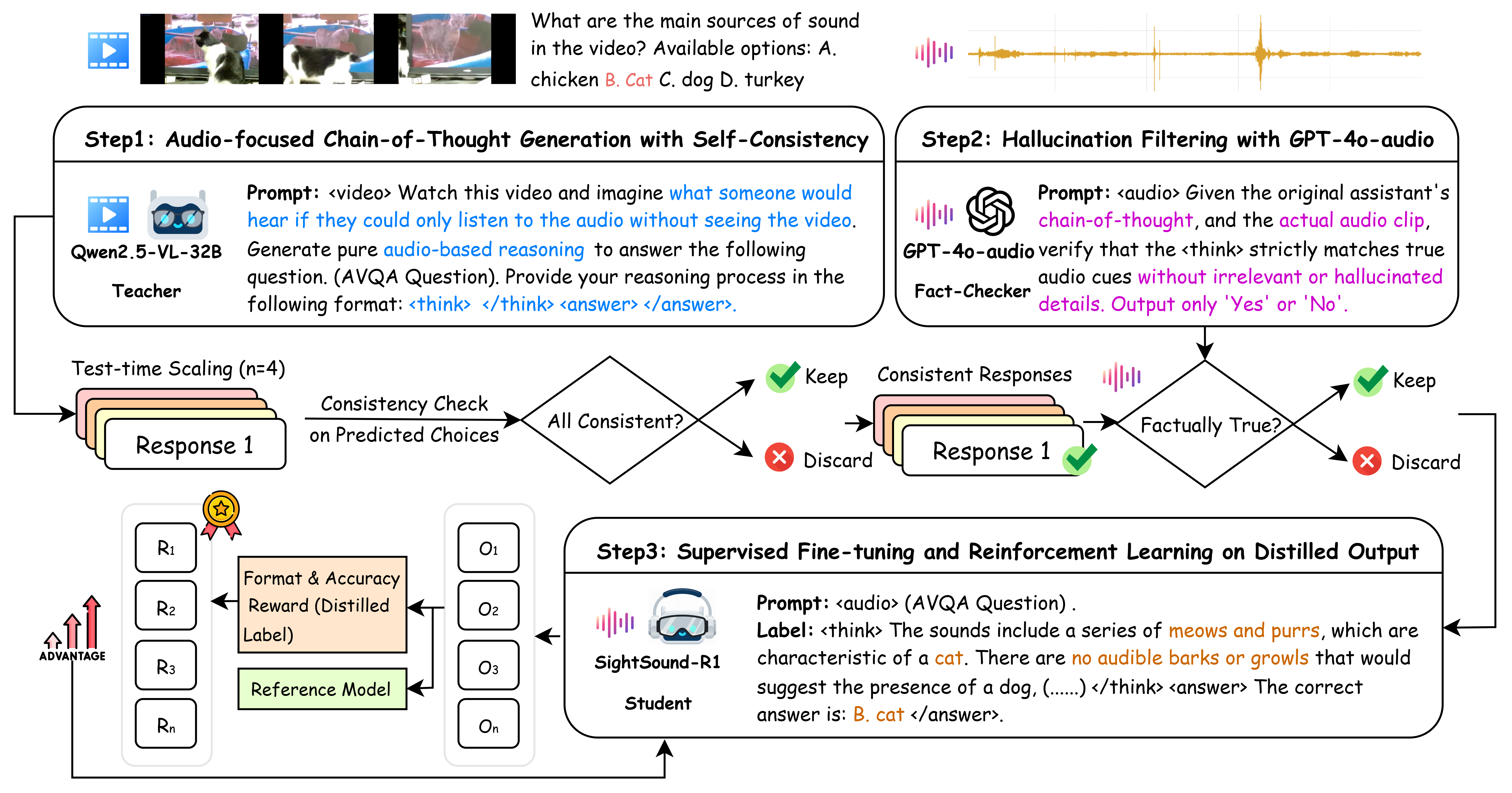

SightSound-R1: Cross-Modal Reasoning Distillation from Vision to Audio Language Models. arXiv preprint arXiv:2509.15661, 2025.
